LSI 3ware 9690SA-8I SAS RAID Controller Review

We continue testing RAID controllers on a platform with SAS drives. Today we are going to talk about an 8-port SAS/SATA RAID controller from the LSI 3ware family. Read more in our review.

We continue our tests of RAID controllers on our new testbed that features high-performance hard disk drives with a SAS interface. In this review we will discuss the best controller from the highly popular 3ware product line manufactured under the guidance of AMCC (as a matter of fact, Applied Micro Circuits Corporation acquired 3ware back in 2004). Now, however, there is a new owner of the 3ware product line: LSI Corporation recently (April 6th) acquired the 3ware RAID adapter business of AMCC.

We will find out whether the architecture of the LSI 3ware 9690SA controller can cope with eight modern 15,000rpm hard disks.

Closer Look at LSI 3ware 9690SA

The 3ware 9690SA is the only series of RAID controllers in the LSI 3ware family that supports hard disk drives with a SAS interface. Other 3ware controllers support SATA, and the 7506 series even supports PATA. The 9690SA series offers four models that differ in their connectors. The -8I model we have features two internal SFF-8087 connectors, each of which provides up to four SAS ports. The -8E model has two external SFF-8088 connectors, and the -4I4E has one internal and one external connector. Finally, the -4I model is equipped with only one internal connector.

Otherwise, the controllers in the series are all identical. They are all based on the same processor, equipped with 512 megabytes of DDR2 SDRAM clocked at 533MHz (desktop PCs used to have that much system memory just a few years ago!), and have a PCI-Express x8 interface.

Interestingly, the controller’s main processor is the same as in the 3ware 9650SE we discussed in an earlier review. The marking differs in the serial number: AMCC Power PC405CR. 3ware still does not specify the processor clock rate but, judging by the marking, it must be 266MHz. This is not a high frequency if you compare it with competitor products. For example, the Promise SuperTrak EX8650 controller we tested earlier (by the way, you may want to read that review in order to understand this one better – we will be referring to it often) has a processor frequency of 800MHz, and the senior model from the same SuperTrak series has a processor frequency of 1200MHz. On the other hand, the 3ware controller’s processor is architecturally different from the popular Intel XScale, making direct comparisons incorrect.

Besides the controller, the box contains a brief user manual, a disc with drivers and software, a back-panel bracket to install the controller into low-profile system cases, and two cables.

The cables are CBL-SFF8087-05M for connecting racks with SFF-8087 connectors. If you want to connect HDDs directly to the controller, you must use CBL-SAS8087OCF-06M cables that end in four disk connectors.

The controller supports nearly every popular type of RAID array you can build with eight HDD ports, namely:

- Single disk

- RAID0

- RAID1

- RAID5

- RAID6

- RAID10

- RAID50

RAID60 is missing here (it is a stripe of two RAID6 arrays), but that’s not a big loss. Most users will prefer to complement fault tolerance with either higher capacity (RAID6) or higher speed (RAID10).
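The capacity side of that trade-off can be put into numbers. The following is a minimal sketch using the textbook capacity rules for each array type, counted in whole disks; the function name `usable_disks` is ours and is not part of any 3ware tool.

```python
def usable_disks(level: str, n: int) -> int:
    """Return usable capacity, in whole-disk units, for n equal disks."""
    if level == "RAID0":
        return n                  # pure striping, no redundancy
    if level == "RAID10":
        if n % 2:
            raise ValueError("RAID10 needs an even number of disks")
        return n // 2             # every disk has a mirror copy
    if level == "RAID5":
        return n - 1              # one disk's worth of checksums
    if level == "RAID6":
        return n - 2              # two disks' worth of checksums
    raise ValueError(f"unsupported level: {level}")

for n in (4, 8):
    row = {lvl: usable_disks(lvl, n) for lvl in ("RAID0", "RAID10", "RAID5", "RAID6")}
    print(n, "disks:", row)
```

With eight disks RAID6 keeps six disks of capacity against four for RAID10, which is the capacity advantage mentioned above.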

In our previous test session the Promise EX8650 refused to enable deferred writing without a backup battery unit (BBU), which greatly affected its write performance. Therefore we tried to find a BBU for the 3ware controller’s cache even though the controller allows deferred writing to be forced on without one. We just did not want to take any risks this time around.

Unlike 3ware’s previous controller series, the 9690SA supports a new type of BBU called BBU-Module-04. Such batteries can be used with 9650SE and 9550SXU controllers, too. The difference is obvious: the BBU is now installed not on the controller but on a separate card that occupies another slot in your system case. It is not actually plugged into a mainboard expansion slot but takes the place above one, using the corresponding bracket opening in the back panel. The BBU is connected to the controller with a cable and a special tiny daughter card that is fastened to the controller with two plastic posts and communicates with it via a special connector.

The controller is still managed in two ways: via BIOS or through the exclusive OS-based 3DM application. There are versions of 3DM available for Windows, Linux and FreeBSD in both 32-bit and 64-bit variants.

Testbed and Methods

The following benchmarks were used:

- IOMeter 2003.02.15

- WinBench 99 2.0

- FC-Test 1.0

Testbed configuration:

- Intel SC5200 system case

- Intel SE7520BD2 mainboard

- Two Intel Xeon 2.8GHz CPUs with 800MHz FSB

- 2 x 512MB PC3200 ECC Registered DDR SDRAM

- IBM DTLA-307015 hard disk drive as system disk (15GB)

- Onboard ATI Rage XL graphics controller

- Windows 2000 Professional with Service Pack 4

The controller was installed into the mainboard’s PCI-Express x8 slot. We used Fujitsu MBA3073RC hard disk drives for this test session. They were installed into the standard boxes of the SC5200 system case and fastened with four screws at the bottom. The controller was tested with four and eight HDDs in the following modes:

- RAID0

- RAID10

- Degraded RAID10 with one failed HDD

- RAID5

- Degraded RAID5 with one failed HDD

- RAID6

- Degraded RAID6 with one failed HDD

- Degraded RAID6 with two failed HDDs

A four-disk RAID6 is not present because 3ware does not allow such an array to be built, either in the BIOS or in the driver. We don’t like the developer’s position on this point. On one hand, it is clear that a four-disk RAID10 is going to be faster while delivering the same useful capacity. On the other hand, a four-disk RAID10 offers lower fault tolerance than a four-disk RAID6. A four-disk RAID10 can only survive a failure of two disks if these disks belong to different mirrors, whereas a four-disk RAID6 is free from this limitation and can survive a failure of any two disks. It would be good if the user had the choice between maximum fault tolerance and speed.
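The fault-tolerance argument can be checked by simply enumerating every possible pair of failed disks. This is a small illustrative sketch; the pairing of disks into mirrors in `MIRRORS` is an assumption made for the example.

```python
from itertools import combinations

DISKS = range(4)
MIRRORS = [(0, 1), (2, 3)]  # assumed pairing of the four disks into two mirrors

def raid10_survives(failed):
    # The array is lost only if both disks of some mirror have failed.
    return not any(set(m) <= set(failed) for m in MIRRORS)

def raid6_survives(failed):
    # Two independent checksums tolerate any two failed disks.
    return len(failed) <= 2

pairs = list(combinations(DISKS, 2))
raid10_ok = sum(raid10_survives(p) for p in pairs)
raid6_ok = sum(raid6_survives(p) for p in pairs)
print(f"RAID10 survives {raid10_ok} of {len(pairs)} two-disk failures")
print(f"RAID6 survives {raid6_ok} of {len(pairs)} two-disk failures")
```

Of the six possible two-disk failures, the four-disk RAID10 survives four, while the four-disk RAID6 would survive all six.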

As we try to cover all possible array types, we will publish the results of degraded arrays. A degraded array is a redundant array in which one or more disks (depending on the array type) have failed but the array still stores data and performs its duties.
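To show how a degraded array keeps serving data, here is a simplified sketch of single-checksum (RAID5-style) recovery using XOR. It illustrates the principle only and says nothing about how the 9690SA firmware implements it; the second checksum of RAID6 uses different math and is not shown.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

data = [b"AAAA", b"BBBB", b"CCCC"]   # one stripe spread over three data disks
parity = xor_blocks(data)            # checksum block kept on a fourth disk

# The disk holding data[1] fails: rebuild its block from the survivors and parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
assert rebuilt == data[1]
print("rebuilt block:", rebuilt)
```

Every read that lands on the failed disk needs all the surviving blocks of the stripe, which is why degraded arrays carry an extra load.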

For comparison’s sake, we publish the results of a single Fujitsu MBA3073RC hard disk on an LSI SAS3041E-R controller as a kind of reference point. We want to note that this combination of HDD and controller has one problem: its write speed in FC-Test is very low.

We used the latest BIOS available at the manufacturer’s website for the controller and installed the latest drivers. The BIOS and driver pack was version 9.5.1.

Performance in Intel IOMeter

Database Patterns

In the Database pattern the disk array processes a stream of requests to read and write 8KB blocks at random addresses. The share of writes is changed from 0% to 100% in 10% steps throughout the test, while the request queue depth varies from 1 to 256.
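The set of measurement points in this pattern can be sketched as a simple grid. The 10% steps and the 1 to 256 range come from the description above; stepping the queue depth in powers of two is our assumption, as the exact depths are not listed here.

```python
from itertools import product

BLOCK_SIZE_KB = 8                              # random-address 8KB requests
write_shares = list(range(0, 101, 10))         # 0%, 10%, ..., 100% writes
queue_depths = [2 ** k for k in range(9)]      # 1..256; powers of two are an assumption

# Each point is one (queue depth, write share) combination to be measured.
grid = list(product(queue_depths, write_shares))
print(len(write_shares), "mixes x", len(queue_depths), "queue depths =", len(grid), "points")
```

The same mixes can equally be read as the share of reads going from 100% down to 0%.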

We’ll be discussing graphs and diagrams but you can view the data in tabled format using the following links:

- Database, RAID1+RAID10

- Database, RAID5+RAID6

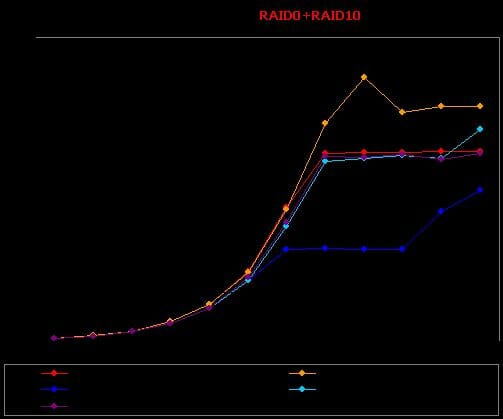

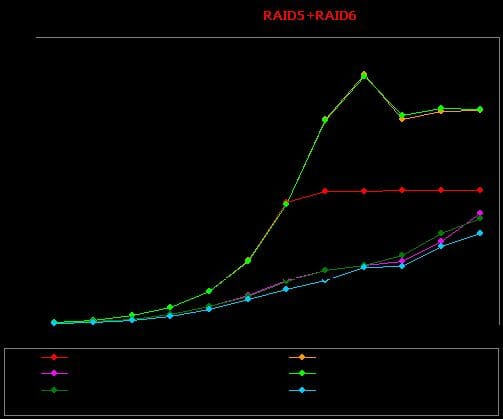

We will discuss the results for queue depths of 1, 16 and 256 requests. We will also be discussing the RAID0 and RAID10 arrays separately from the checksum-based RAID5 and RAID6.

Deferred writing is the only technique at work when the load is low, so we don’t see any surprises. It is good that the eight-disk RAID10 is almost as fast as the four-disk RAID0, just as the theory has it. The degraded RAID10 is also as fast as them, meaning that the failure of one disk does not lead to a performance hit.

The situation is more complicated with the checksum-based arrays. First of all, we have excellent results at high percentages of writes. RAID controllers usually slow down under such load because they are limited by the speed of checksum computations (and the related overhead in the way of additional read and write operations). We don’t see that here: the huge amount of cache memory and the thought-through architecture help the controller cope with low loads easily.

The RAID6 arrays are, as expected, a little slower than the RAID5 arrays built out of the same number of disks. This is because each stripe carries two checksum blocks rather than one, so the equivalent of two disks is taken up by checksums instead of data. The one-disk-degraded RAID6 and RAID5 are good enough, but the latter slows down suddenly when doing pure writing. The degraded RAID6 with two failed disks (on the verge of failing completely) is sluggish.

When the queue depth is increased to 16 requests, the RAID0 and RAID10 just increase their performance steadily. The RAID10 are still as fast at writing as the RAID0 consisting of half the disks.

Note that at high percentages of reads the RAID10 arrays are much better than the RAID0 arrays built out of the same number of disks. This is normal because the controller can alternate read requests between the disks of each mirror, depending on which disk can serve the request quicker (the one whose heads are closer to the requested location on the platter).
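The idea of sending a read to the mirror disk whose heads are nearer can be sketched as follows. This is a toy model with hypothetical names and positions; the actual selection logic of the controller is not documented in this review.

```python
def pick_mirror_disk(head_positions, target_lba):
    """Return the index of the mirror disk whose head is nearest the target."""
    return min(range(len(head_positions)),
               key=lambda i: abs(head_positions[i] - target_lba))

heads = [1_000, 900_000]          # hypothetical current head positions (as LBAs)
for lba in (5_000, 850_000, 400_000):
    disk = pick_mirror_disk(heads, lba)
    heads[disk] = lba             # the chosen disk's head moves to the request
    print(f"LBA {lba} -> disk {disk}")
```

Because each disk tends to stay in its own region of the platter, the average seek gets shorter than on a single disk, which is where the read advantage of mirrors comes from.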

The degraded RAID10 is good, too. Its performance hit is directly proportional to the percentage of reads in the queue. This is easy to explain: it has only one disk in one of the mirrors, which affects the speed of reading, whereas writing is still done in the buffer memory and is not affected by the loss of one disk.

The RAID5 and RAID6 arrays do not feel at their ease here. Their graphs are zigzagging, indicating imperfections in the firmware. Take note that these arrays have only sped up on reads. The number of operations per second is the same at pure writing. Even highly efficient architectures have their limits, and we see this limit at pure writing. Anyway, the performance is very high compared to what we saw in our earlier test sessions.

Curiously enough, the eight-disk RAID6 is ahead of the RAID5 at pure reading. This is indicative of firmware problems with respect to the latter array type.

As for the degraded arrays, the RAID6 without one disk still maintains a high speed but the failure of a second disk just kills its performance. The degraded RAID5 has an inexplicable performance hit when it does pure writing.

There is nothing unusual with the RAID0 and RAID10 arrays when the queue becomes as long as 256 requests. Everything is just as expected. Perhaps the only surprising thing is the behavior of the eight-disk RAID0: graphs in this test usually sag in the middle, where there is about the same amount of reads and writes, but the graph of that array rises in that area!

When the queue is very long, the graphs of the RAID5 and RAID6 arrays smooth out, making their performance more predictable. There are no surprising results here, and even the degraded RAID6 without two disks tries its best to catch up with the others. The degraded RAID5 without one disk acts up a little, slowing down too much at high percentages of reads.

Disk Response Time

IOMeter is sending a stream of requests to read and write 512-byte data blocks with a request queue depth of 1 for 10 minutes. The disk subsystem processes over 60 thousand requests, so the resulting response time doesn’t depend on the amount of cache memory.
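The request count follows directly from the response time, because at a queue depth of 1 requests are served strictly one after another. A quick sketch of that arithmetic, using whole milliseconds (the function name is ours):

```python
TEST_DURATION_MS = 10 * 60 * 1000   # the 10-minute run described above

def requests_completed(response_time_ms: int) -> int:
    """Requests served back to back at queue depth 1 during the test."""
    return TEST_DURATION_MS // response_time_ms

print(requests_completed(10), "requests at an average of 10 ms")
print(requests_completed(6), "requests at an average of 6 ms")
```

An average response time below 10 milliseconds is therefore enough to pass the 60-thousand mark within the 10 minutes.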

The read response time of each array is somewhat worse than that of the single disk. The difference is negligible with the RAID10 arrays, though. The controller’s lag is made up for by reading from whichever disk in a mirror can deliver the data quicker.

The other arrays are not so good here. Some of them are worse by over 1 millisecond, which is a lot considering that the read response of the single disk is only 6 milliseconds. There is no pattern in the behavior of the arrays: the RAID6 proves to be the best among the eight-disk arrays, the four-disk arrays being considerably slower. There is something odd with the degraded arrays. It is unclear why the RAID6 without two disks shows the best response time among them.

The write response time is determined by the combined cache of the array and controller, and the mirrors of RAID10 arrays must be viewed as a single disk. This rule does not work for the checksum-based arrays because they have to perform additional operations besides just dumping data into the buffer memory. Anyway, their write response time is good. It is lower than that of the single disk, meaning that the checksum calculation is done without problems and with minimum time loss.

The RAID6 without two disks has a very high response time, but this was to be expected due to the increased load on this degraded array. The controller has to “emulate” the two failed disks by reading data from the live disks and computing what data should be stored on the failed ones. The result is not terrible, though. The Promise EX8650 had write response times higher than 20 milliseconds when working without a BBU!

Random Read & Write Patterns

Now we’ll see the dependence of the disk subsystems’ performance in random read and write modes on the data chunk size.

We will discuss the results of the disk subsystems at processing random-address data in two variants, based on our updated methodology. For small data chunks we will draw graphs showing the dependence of the number of operations per second on the data chunk size. For large chunks we will compare performance in terms of data-transfer rate in megabytes per second. This approach helps us evaluate the disk subsystem’s performance in two typical scenarios. Working with small data chunks is typical of databases, where the number of operations per second is more important than sheer speed. Working with large data blocks is nearly the same as working with small files, and the traditional measurement of speed in megabytes per second is more relevant for such load.

We will start out with reading.

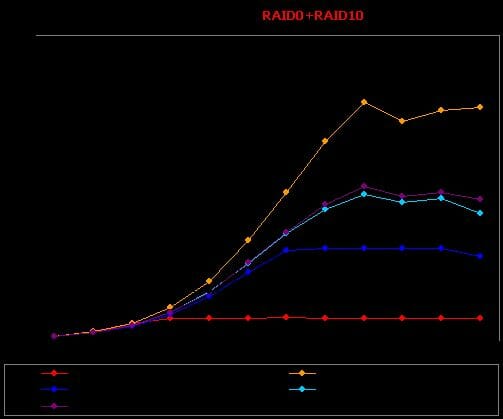

The two RAID10 arrays are beyond competition when reading data in small portions. Even the eight-disk RAID0 finds it impossible to beat their very effective reading from the mirrors. The degraded RAID10 feels good, too. Its performance hit is very small.

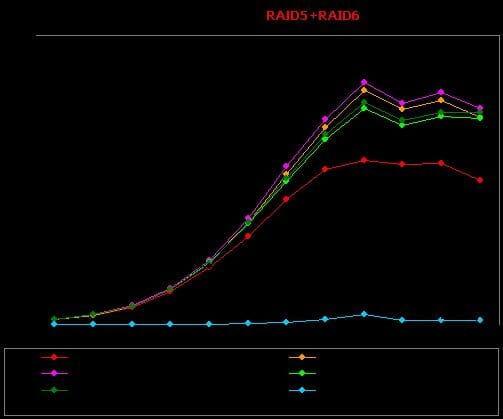

Data are read from the checksum-based arrays in the same way: every array is somewhat slower than the single disk. The degraded arrays, even the RAID6 without two disks, are but slightly inferior to the normal ones. It means that the data recovery from checksums is done on them without losing much speed.

When the data chunk size is very big, the higher linear speed of the RAID0 arrays shows up, helping them beat the same-size RAID10 arrays. The controller seems to be able to read from both disks in each mirror: otherwise, the eight-disk RAID10 would not be able to outperform the four-disk RAID0. The degraded RAID10 “realizes” that one of its mirrors is defective and reads from only one disk in each mirror.

Linear speed is the decisive factor for the RAID5 and RAID6 arrays, too, when they come to process very large data blocks. As a result, the eight-disk arrays go ahead. The degraded arrays are expectedly slower than the normal ones: the performance hit is smaller with RAID5 and bigger with RAID6.

Now let’s see what we have at random writing.

The number of disks in the array and the total amount of cache memory do the trick here. Take note of the nearly ideal scalability of performance depending on the number of disks. Note the shape of the graphs, too. This is actually an example of how they should look.

For the checksum-based arrays the number of disks is still important as is indicated by the results of the eight- and four-disk RAID5 arrays. However, the checksum calculations are a factor, too. This is the reason why the RAID6 (for which two rather than one checksum must be calculated) are slower than the RAID5.
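The cost of the extra checksum can be estimated with the textbook read-modify-write model, which counts how many disk operations stand behind one small random write. This is a generic sketch, not a description of the 9690SA firmware; a large cache can combine writes and hide much of this overhead.

```python
# Back-end disk operations per small random write (textbook read-modify-write model).
WRITE_PENALTY = {
    "RAID0": 1,   # write the data block
    "RAID10": 2,  # write the data block to both disks of a mirror
    "RAID5": 4,   # read data + read checksum, write data + write checksum
    "RAID6": 6,   # as RAID5, but two checksum blocks are read and written
}

def backend_ops(level: str, host_writes: int) -> int:
    """Disk operations needed to serve the given number of host writes."""
    return host_writes * WRITE_PENALTY[level]

for level in WRITE_PENALTY:
    print(f"{level}: 1000 host writes -> {backend_ops(level, 1000)} disk operations")
```

In this model RAID6 needs half as many disk operations again as RAID5 for the same stream of writes, which agrees with the direction of the results above.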

The degraded RAID6 without two disks is terribly slow again. You should not let it degrade that much: it does much better with only one failed disk.

The number of disks matters with large data blocks, too. A disk mirror must be viewed as a single disk here. The four-disk RAID0 has poor performance due to unexpected problems with large data blocks. The degraded RAID10 is impressively good: it is occasionally faster than the normal RAID10 of the same size!

It is the number of disks that’s important for the checksum-based arrays when processing large data chunks. There are no performance slumps here. All checksums are calculated at a high speed. The degraded arrays are good. They just do not “notice” that they lack one disk. The RAID6 without two disks is the only configuration to show poor performance, which is an obvious flaw in the controller’s firmware.

Sequential Read & Write Patterns

IOMeter is sending a stream of read and write requests with a request queue depth of 4. The size of the requested data block is changed each minute, so that we could see the dependence of an array’s sequential read/write speed on the size of the data block. This test is indicative of the highest speed a disk array can achieve.

The controller can obviously read from both disks in each mirror as is indicated by the characteristic increase in speed on large data blocks after the flat stretches of the graphs. The eight-disk RAID0 is somewhat disappointing, though. While the Promise EX8650 delivered a speed of over 900MBps, the 3ware 9690SA cannot give more than 700MBps, and only with 128KB data chunks. On the other hand, it is all right with the four-disk RAID0 and the horizontal stretch of the eight-disk RAID10’s graph: 450MBps is close to the performance of the single disk multiplied by four. So, these must be the peculiarities of the controller’s architecture.

The four-disk RAID5 and RAID6 arrays have a performance peak on 128KB data blocks, too, and slow down afterwards. Interestingly, every eight-disk array, save for the RAID10, has the same speed at the peak and with the largest data blocks: 650MBps and 550MBps, respectively.

The degraded arrays show a dependence of read speed on the size of the requested data chunk. This dependence must be due to the necessity of restoring data from checksums. Curiously enough, the same dependence holds true for the degraded RAID6 without two disks. The controller’s architecture seems to be able to effectively restore data from two checksums simultaneously with almost zero performance loss.

The eight-disk RAID0 is insufficiently fast at sequential writing, too. However, the four-disk RAID0 is even worse: it is only half as fast as the single disk. The RAID10 arrays behave in a curious way. First, their performance fluctuates somewhat on large data blocks. And second, the degraded array is a little faster than the original one. We can suppose that the degraded array just does not have to wait for a write confirmation from the failed disk, but this can hardly have such a big effect.

The eight-disk arrays have the same and quite high sequential write speed. The controller has enough performance for every disk, and the RAID6 does not differ much from the RAID5, just as it should. Interestingly, the degraded arrays are again somewhat faster than the original ones. This does not refer to the degraded RAID6 without two disks: its speed of writing is terribly low.

Multithreaded Read & Write Patterns

The multithreaded tests simulate a situation when there are one to four clients accessing the virtual disk at the same time, the number of outstanding requests varying from 1 to 8. The clients’ address zones do not overlap. We’ll discuss diagrams for a request queue of 1 as the most illustrative ones. When the queue is 2 or more requests long, the speed doesn’t depend much on the number of applications.

- IOMeter: Multithreaded Read RAID1+RAID10

- IOMeter: Multithreaded Read RAID5+RAID6

- IOMeter: Multithreaded Write RAID1+RAID10

- IOMeter: Multithreaded Write RAID5+RAID6

The results of reading one thread are really shocking as all the arrays, except for the degraded checksum-based ones, deliver the same speed of about 160MBps. We had the same thing with the Promise EX8650: the controller would reach a certain limit (it was 210MBps then) and deliver its maximum speeds only at request queues longer than 1. You can look at the tables above and see that the 3ware controller only achieves its maximum speeds at a queue of 3 or 4 requests or longer (by the way, the Promise controller often needed an even longer queue). Thus, you should not expect this controller to deliver a speed of much higher than 150MBps when reading even a very large file.

But let’s get back to the multithreaded load. When there are two threads to be processed, the RAID0, RAID5 and RAID6 arrays slow down somewhat. The controller generally copes with multithreading well. It is only the four-disk RAID5 that has a performance hit of over 25%. The controller can parallelize the load effectively on the RAID10 arrays by reading different threads from different disks in the mirrors. The resulting speed is almost two times the speed of reading one thread. Surprisingly enough, the degraded RAID5 and RAID6 arrays speed up when processing two threads, too.

When there are three data threads to be read, the slow arrays improve their standings while the RAID10 arrays get somewhat worse. Judging by the results, the RAID10 arrays seem to forget how to read from both disks in a mirror. The controller must be confused as to which disk should get two threads and which, the remaining third thread. As a result, the number of disks becomes an important factor again, the four-disk arrays being the slowest. The eight-disk RAID6 breaks this rule somewhat as it should be faster than the four-disk RAID0.

When the number of threads is increased further to four, all the four-disk arrays, excepting the RAID10, accelerate. Unfortunately, the RAID10 does not provide a performance gain: the controller must have been unable to identify the load and send two threads to each disk in a mirror.

The speeds are higher when the arrays are writing one thread, yet the maximums of speed are achieved at longer request queue depths only. The degraded RAID6 with two failed disks is expectedly poor but why is the four-disk RAID0 so slow? We don’t know. When a second write thread is added, all the arrays, save for the mentioned problematic two, speed up. The eight-disk arrays speed up greatly. It is only the four-disk RAID5 and the RAID10 arrays that have a small performance growth, but it is no wonder with RAID10: each mirror works as a single disk when performing write operations.

Take note of the good behavior of the degraded arrays: as required, the controller just does not notice that a disk has failed. Judging by the speeds, which are higher than those of the original arrays, the controller skips the checksum calculation if the checksum is to go to the failed disk.

The speeds are somewhat lower when there are more write threads to be processed.

Web-Server, File-Server, Workstation Patterns

The controllers are tested under loads typical of servers and workstations. The names of the patterns are self-explanatory. The request queue is limited to 32 requests in the Workstation pattern. Of course, Web-Server and File-Server are nothing but general names. The former pattern emulates the load of any server that is working with read requests only whereas the latter pattern emulates a server that has to perform a certain percent of writes.

- IOMeter: File-Server

- IOMeter: Web-Server

- IOMeter: Workstation

- IOMeter: Workstation, 32GB

Under the server-like load of read requests, the RAID10 are always ahead of the RAID0 by reading from the “better” disk in a mirror.

The checksum-based arrays produce neat graphs, too. There are no surprises here.

The performance ratings are perfectly understandable. The mirror arrays are ahead. The performance of the others depends only on the number of disks in them. As the load is close to random reading, the RAID5 and RAID6 arrays are about as fast as the RAID0. The high-performance processor helps minimize the negative effect of data recovery from checksums for the degraded arrays. The resulting performance hit is comparable to just decreasing the array by one disk.

When there is a small share of writes in the load, the RAID10 are only victorious at short queue depths. The RAID0 arrays win at long queue depths.

The write requests make the RAID6 fall behind the RAID5. The performance of the RAID6 degraded by two disks slumps greatly, especially at short queue depths.

Since our performance rating formula gives higher weights to the results at short queue depths, the RAID10 are just a little worse than the same-size RAID0.

The RAID10 are again somewhat faster than the RAID0 at short queue depths but cannot oppose it at long queue depths. RAID5 is preferable to RAID6 for workstations. Calculating a second checksum is a burden that lowers the array’s performance greatly. The degraded RAID6 without two disks is very slow, being inferior even to the single disk.

The RAID10 and RAID0 now score almost the same amount of points. The checksum-based arrays cannot compete anymore: the additional operations cannot be made totally free in terms of performance. As a result, even the eight-disk RAID5 is inferior to the RAID0 and RAID10 built out of four disks.

When the test zone is limited to 32 gigabytes, workstations prefer RAID0 to mirror arrays without any limitations. There are almost no changes among the checksum-based arrays except that the degraded RAID6 without two disks feels somewhat better here.

The performance ratings confirm the obvious: the RAID0 arrays meet no competition from same-size arrays of other types.

Performance in FC-Test

For this test two 32GB partitions are created on the virtual disk of the RAID array and formatted in NTFS and then in FAT32. Then, a file-set is created on it. The file-set is then read from the array, copied within the same partition and then copied into another partition. The time taken to perform these operations is measured and the speed of the array is calculated. The Windows and Programs file-sets consist of a large number of small files whereas the other three patterns (ISO, MP3, and Install) include a few large files each.

We’d like to note that the copying test is indicative of the array’s behavior under complex load. In fact, the array is working with two threads (one for reading and one for writing) when copying files.

This test produces too much data, so we will only discuss the results of the Install, ISO and Programs patterns in NTFS, which illustrate the most characteristic use of the arrays.

The RAID0 arrays are obviously the best when creating files. However, the speeds are far from what you could expect from multi-disk arrays based on fast HDDs when reading large files. If we disregard the obviously poor performance of the single disk on the LSI controller, the speed of writing should equal the number of disks multiplied by 100MBps (this is the rate at which modern HDDs can deliver data from the platter).

The four-disk RAID0 arrays have inexplicable problems with certain file-sets.

The RAID5 and RAID6 arrays are surprisingly good at writing, being only inferior to the eight-disk RAID0. The degraded RAID5 even becomes the leader. It seems to save a lot on checksum calculations. This does not refer to the degraded RAID6 without two disks, which writes very slowly.

The arrays reach the same limit we have seen in the multithreaded test. Reading is usually performed by the OS with a short queue depth. As a result, the controller proves to be faster at writing than at reading.

We see the same speed limitation at a queue depth of 1 for the checksum-based arrays, too. This limitation refers to the full arrays, though. The degraded arrays slow down as they need to recover data from checksums. It should be noted that the RAID6 (both normal and degraded by one disk) are as fast as the RAID5.

Copying within the same partition seems to be largely determined by the speed of reading. The arrays are similar in speed, the eight-disk arrays having but a small advantage.

The RAID5 are somewhat better than the RAID6 based on the same amount of disks when copying within the same partition: the read speed limitation must be combined with the ability of the RAID5 arrays to write a little bit faster than their opponents. Every degraded array, except for the RAID6 without two disks, feels good here.

There is nothing new in the Copy Far subtest: the standings are like in the previous subtest.

Performance in WinBench 99

Finally, here are data-transfer graphs recorded in WinBench 99:

And these are the data-transfer graphs of the RAID arrays built on the Promise SuperTrak EX8650 controller:

- RAID0, 4 disks

- RAID0, 8 disks

- RAID10, 4 disks

- RAID10, 8 disks

- RAID10, 8 disks minus 1

- RAID5, 4 disks

- RAID5, 8 disks

- RAID5, 8 disks minus 1

- RAID6, 8 disks

- RAID6, 8 disks minus 1

- RAID6, 8 disks minus 2

The following diagram compares the read speeds of the arrays at the beginning and end of the partitions created on them. Yes, the RAID6 gets very weak when it loses two disks. Take note of the performance of the eight-disk arrays: all of them, save for the RAID10, have identical speeds at the beginning and end, and their graphs are flat lines. And their speed is rather low. It looks like the controller indeed has a limit of maximum linear speed due to the peculiarities of its architecture. There is a bottleneck whose bandwidth is not sufficient for reading simultaneously from eight disks each of which can yield over 100MBps.
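The bottleneck can be illustrated with a very simple model: the ideal speed of a striped array is the number of disks multiplied by the per-disk rate, clipped by the controller’s ceiling. The 100MBps per-disk figure and the roughly 650MBps ceiling are taken from this review; treating the ceiling as a hard cap is our simplification.

```python
PER_DISK_MBPS = 100        # per-disk rate quoted in the text ("over 100MBps")
CONTROLLER_CAP_MBPS = 650  # ceiling observed in this review, modeled as a hard cap

def expected_linear_read(disks: int) -> int:
    """Ideal striped read speed, limited by the assumed controller ceiling."""
    return min(disks * PER_DISK_MBPS, CONTROLLER_CAP_MBPS)

for n in (4, 8):
    ideal = n * PER_DISK_MBPS
    print(f"{n} disks: ideal {ideal}MBps, expected {expected_linear_read(n)}MBps")
```

In this model four disks stay below the ceiling, while eight disks could deliver about 800MBps but are held back to 650MBps, which matches the flat graphs of the eight-disk arrays.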

Conclusion

Summing this test session up, we can say that the new 9690SA controller series from LSI 3ware is just perfect for server systems. This controller shows very good and stable performance in IOMeter: Database, especially when writing to RAID5 and RAID6 arrays. It does very well with mirror arrays (choosing the disk that takes less time to access the requested data block and reading sequential data from both disks of a mirror) and is good at multithreaded operations.

This controller is not a good choice for achieving a high linear speed, though. Its architecture copes well with lots of diverse operations but has a top speed limitation of about 650MBps. And one more thing: if you are running a RAID6 array on this controller, avoid having two failed HDDs in it. This will be dangerous for your data (a failure of a third disk will ruin the array) and the write speed will be awfully low.

But what if you need the highest linear speed possible? Just stay tuned for our upcoming RAID controller reviews!